Abstract

State classification of objects and their relations is core to many long-horizon tasks, particularly in robot planning and manipulation. However, the combinatorial explosion of possible object-predicate combinations, coupled with the need to adapt to novel real-world environments, makes it a desideratum for state classification models to generalize to novel queries with few examples. To this end, we propose PHIER, which leverages predicate hierarchies to generalize effectively in few-shot scenarios. PHIER uses an object-centric scene encoder, self-supervised losses that infer semantic relations between predicates, and a hyperbolic distance metric that captures hierarchical structure; it learns a structured latent space of image-predicate pairs that guides reasoning over state classification queries. We evaluate PHIER in the CALVIN and BEHAVIOR robotic environments and show that PHIER significantly outperforms existing methods in few-shot, out-of-distribution state classification, and demonstrates strong zero- and few-shot generalization from simulated to real-world tasks. Our results demonstrate that leveraging predicate hierarchies improves performance on state classification tasks with limited data.

State Classification

State classification of objects and relations is essential for a plethora of tasks, from scene understanding to robot planning and manipulation. Many such long-horizon tasks require accurate and varied state predictions for entities in scenes. For example, planning for “setting up the table” requires classifying whether the cup is NextTo the plate, whether the utensils are OnTop of the table, and whether the microwave is Open.

The goal of state classification is to precisely answer such queries about specific entities in an image, and determine whether they satisfy particular conditions across a range of attributes and relations.

However, the combinatorial space of objects (e.g., cup, plate, microwave) and predicates (e.g., NextTo, OnTop, Open) gives rise to an explosion of possible object-predicate combinations, for which collecting corresponding training data is intractable. In addition, real-world systems operating in dynamic environments must generalize to queries with novel predicates, often with only a few examples. Hence, an essential but difficult requirement for state classification models is to adapt quickly to out-of-distribution queries.

Method

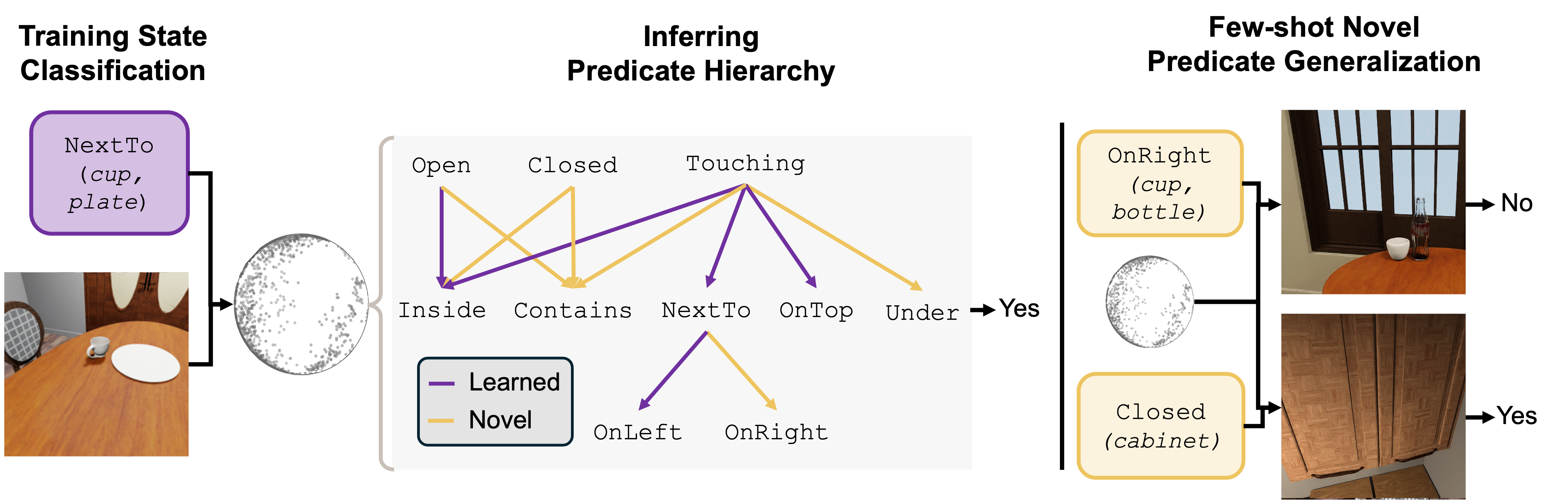

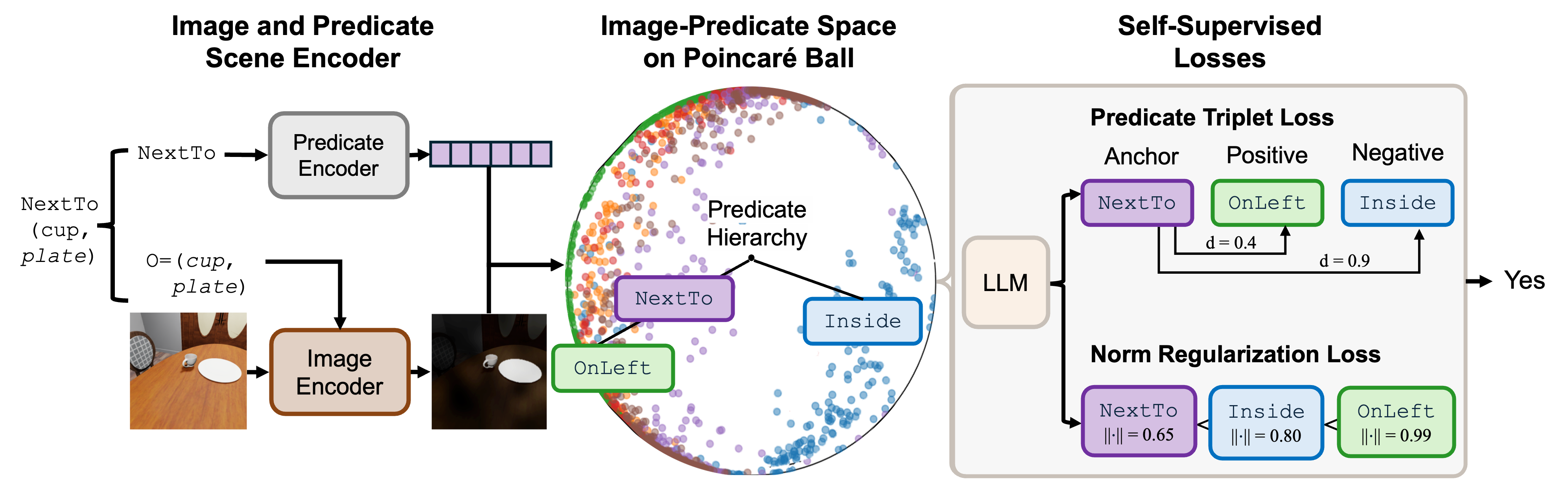

We propose PHIER, a state classification model that leverages the hierarchical structure between predicates to generalize to novel queries from few examples. At the core of PHIER is an image-predicate latent space trained to encode pairwise relationships between predicates. Consider the predicates OnRight and OnLeft: although they describe opposite spatial relationships between objects, they are closely related semantically, as assessing them relies on the same underlying features. PHIER enforces image-predicate representations conditioned on these predicates to lie closer to one another. In addition, there exist predicate pairs with more complex relationships, such as OnRight and NextTo. OnRight is a more specific case of NextTo: verifying OnRight involves recognizing whether the objects are NextTo each other. Features relevant to the higher-level predicate NextTo are therefore useful for reasoning about the lower-level predicate OnRight. PHIER encodes this predicate hierarchy to allow generalizable state classification.
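The relationships described above can be pictured as a small tree. The following is only an illustrative sketch, not PHIER's implementation: the `PARENT` table, the placement of OnLeft under NextTo, and the helper names `ancestors` and `tree_distance` are assumptions made for this example. In PHIER the hierarchy is inferred rather than written by hand.

```python
# Hypothetical toy hierarchy over the predicates named in the text.
# Placing OnLeft under NextTo is an assumption made for illustration.
PARENT = {
    "OnRight": "NextTo",
    "OnLeft": "NextTo",
    "NextTo": None,  # treated as the root in this toy example
}


def ancestors(predicate):
    """Return the chain of higher-level predicates above `predicate`."""
    chain = []
    node = PARENT[predicate]
    while node is not None:
        chain.append(node)
        node = PARENT[node]
    return chain


def tree_distance(a, b):
    """Count the edges between two predicates in the toy hierarchy."""
    path_a = [a] + ancestors(a)
    path_b = [b] + ancestors(b)
    # First node on a's upward path that also lies on b's upward path.
    common = next(p for p in path_a if p in path_b)
    return path_a.index(common) + path_b.index(common)
```

In this sketch, OnRight and OnLeft are siblings two edges apart, while OnRight sits one edge below NextTo. A latent space that respects the hierarchy would aim to keep distances roughly consistent with this kind of tree structure.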

To perform state classification, PHIER first localizes relevant objects in the input image based on a given query, then leverages an inferred predicate hierarchy to structure its reasoning over the scene. This is achieved through three main components:

- Object-Centric Scene Encoder: Localizes regions of the image corresponding to relevant entities, ensuring that the model faithfully identifies relevant entities and then learns to focus on their key features for the given predicate's classification.

- Self-Supervised Losses: Encourage the model to encode pairwise predicate relations in its latent space (e.g., representations for OnRight and NextTo are encouraged to be close). The pairwise relations are inferred from language using an LLM.

- Hyperbolic Distance Metric: Encodes the predicate hierarchy in hyperbolic space, where distances grow exponentially, making it well suited to modeling tree-like structure in continuous space.
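To give a feel for why hyperbolic space suits tree-like structure, here is a minimal sketch of the distance function in the Poincaré ball, one common model of hyperbolic space. The text above does not say which hyperbolic model or curvature PHIER uses, so the choice of the Poincaré ball with curvature -1 and the function name `poincare_distance` are assumptions for illustration only.

```python
import math


def poincare_distance(u, v):
    """Distance between two points in the unit Poincare ball (curvature -1).

    Assumed model for illustration; PHIER's exact metric may differ.
    """
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    sq_u = sum(a * a for a in u)
    sq_v = sum(b * b for b in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v)))


# The same Euclidean gap of 0.1 is measured near the origin and near the boundary.
near_center = poincare_distance((0.0, 0.0), (0.1, 0.0))
near_edge = poincare_distance((0.85, 0.0), (0.95, 0.0))
print(near_center < near_edge)  # the gap near the boundary is much longer
```

Because distances stretch rapidly toward the boundary, there is room to place many well-separated leaf nodes far from the origin while keeping higher-level nodes near the center, which is the property the bullet above refers to.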

Results

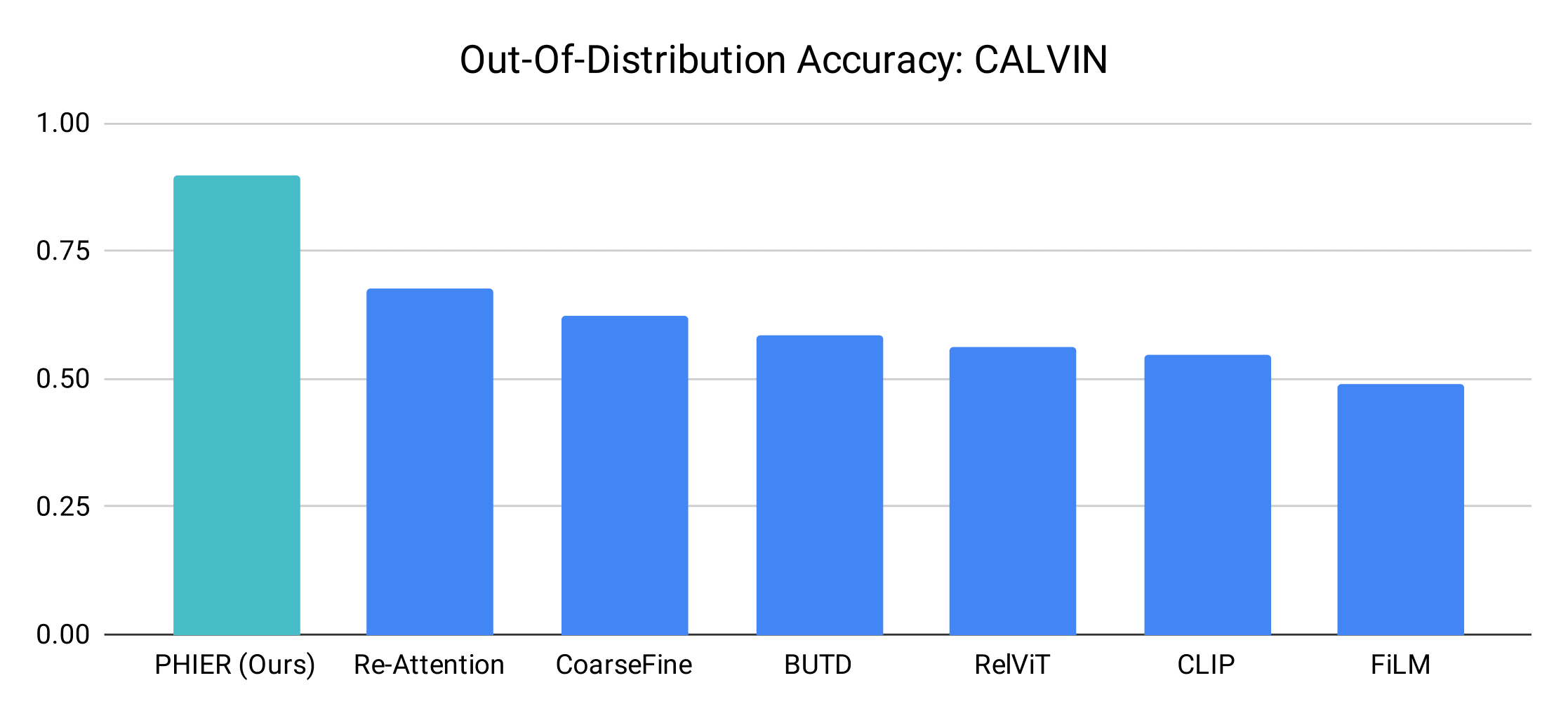

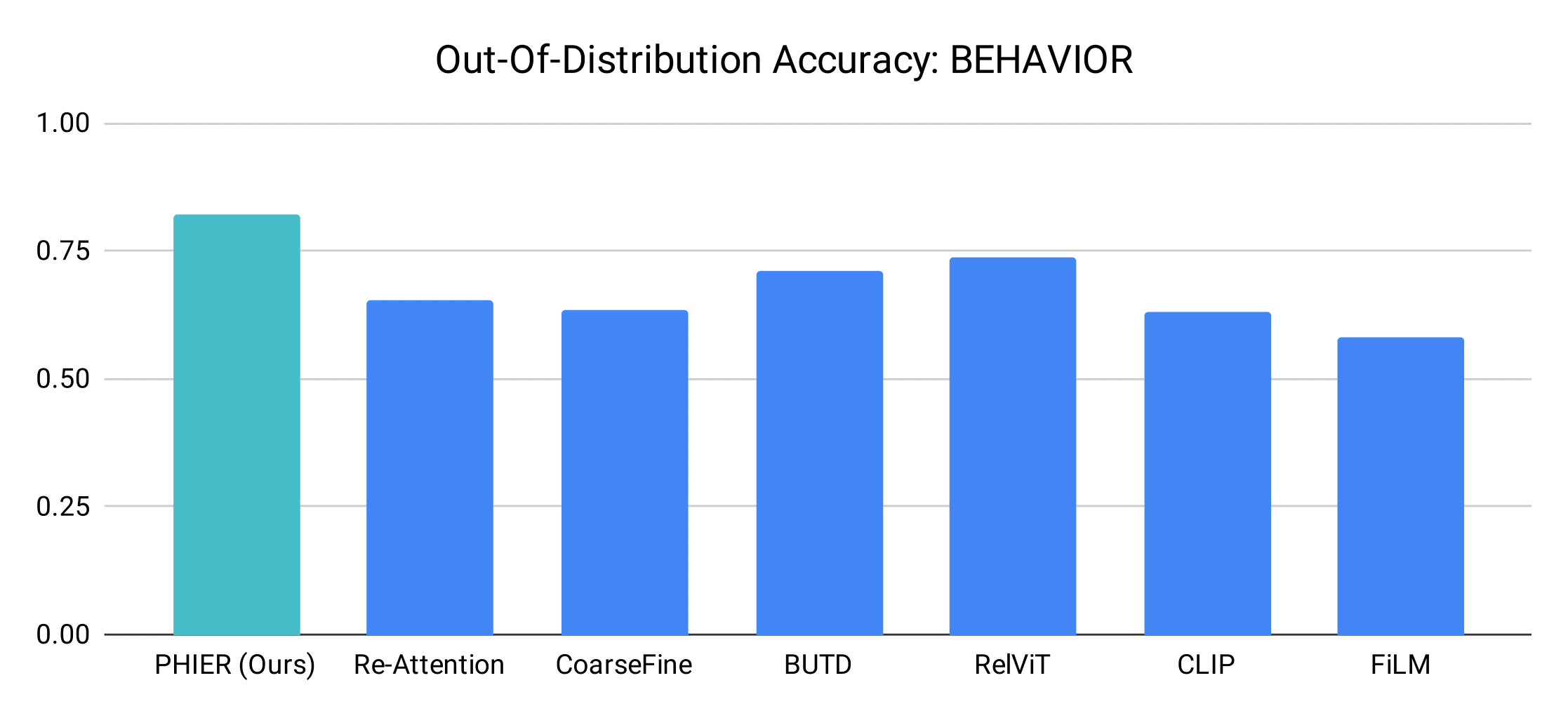

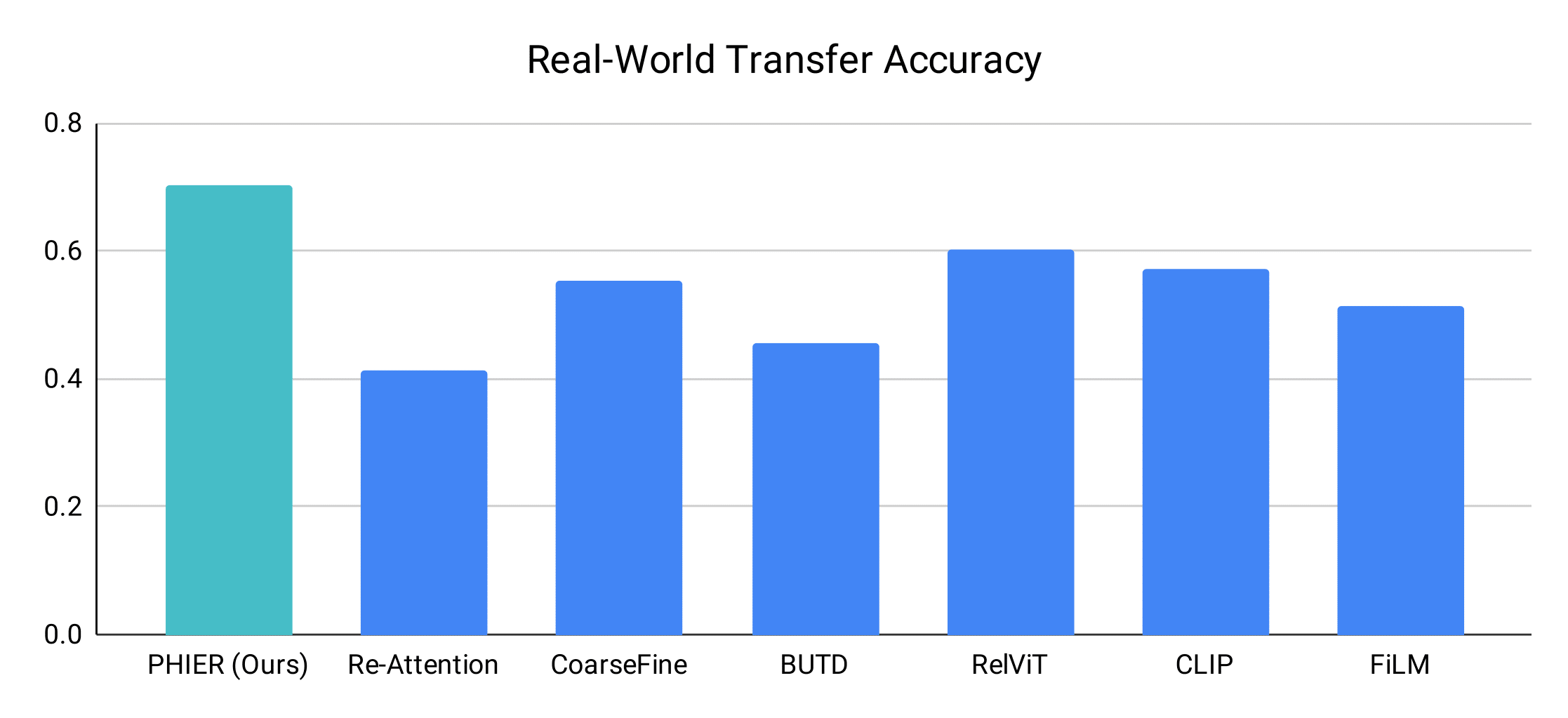

We evaluate PHIER on the state classification task in two robotics environments, CALVIN and BEHAVIOR. Beyond the standard test settings, we focus on few-shot, out-of-distribution tasks involving unseen object-predicate combinations and novel predicates.

PHIER significantly outperforms existing methods on out-of-distribution tasks, including both supervised approaches trained on the same amount of data and inference-only vision-language models (VLMs) trained on large corpora of real-world examples.

PHIER improves upon the top-performing prior work in out-of-distribution tasks by 22.5 percentage points on CALVIN and 8.3 percentage points on BEHAVIOR.

Notably, although trained solely on simulated data, PHIER also outperforms supervised baselines on few-shot generalization to real-world state classification tasks by 10 percentage points.

Overall, we see PHIER as a promising solution to few-shot state classification, enabling generalization by leveraging representations grounded in predicate hierarchies.